I find all this "AI" hoopla to be surreal. The only thing really different between now and the late 1980s is that there are more things being tracked, and processors capable of parallel computation are much more common. I don't see where all this "AI" crap is coming from, but I expect it to not end well. Another one of these:

AI winter - Wikipedia

"In the history of artificial intelligence, an AI winter is a period of reduced funding and interest in artificial intelligence research.[1] The term was coined by analogy to the idea of a nuclear winter.[2] The field has experienced several hype cycles, followed by disappointment and criticism, followed by funding cuts, followed by renewed interest years or decades later."

IMHO, in the world of AI there are basically three eras: 1) Perceptron Era, 2) Expert System Era, 3) Multi-Layer Perceptron Era ("Neural Network").

Perceptrons were connectionist systems modeled on the human eye, and could be trained to solve simple (linearly separable) problems - but not complex problems (XOR problems). They could tell you if A was true, if B was true, or if A or B was true, but NOT if exactly one of A and B was true (A XOR B).
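The XOR limitation is easy to check for yourself. Below is a minimal sketch of a single perceptron trained with the classic perceptron learning rule; the function names, learning rate, and epoch count are my own choices for illustration, not anything from the original research. It learns OR, AND, and even "A and not B" perfectly, but it can never get all four XOR cases right, because no single straight line separates them.

```python
from itertools import product

def predict(weights, a, b):
    """Threshold unit: fires (1) when the weighted sum is positive."""
    w1, w2, bias = weights
    return 1 if w1 * a + w2 * b + bias > 0 else 0

def train_perceptron(samples, epochs=50, lr=1.0):
    """Classic perceptron rule: nudge the weights only on a wrong answer."""
    w1 = w2 = bias = 0.0
    for _ in range(epochs):
        for (a, b), target in samples:
            err = target - predict((w1, w2, bias), a, b)
            w1 += lr * err * a
            w2 += lr * err * b
            bias += lr * err
    return w1, w2, bias

def accuracy(weights, samples):
    hits = sum(predict(weights, a, b) == t for (a, b), t in samples)
    return hits / len(samples)

inputs = list(product([0, 1], repeat=2))
tasks = {
    "OR": [(x, x[0] | x[1]) for x in inputs],
    "AND": [(x, x[0] & x[1]) for x in inputs],
    "A AND NOT B": [(x, x[0] & (1 - x[1])) for x in inputs],
    "XOR": [(x, x[0] ^ x[1]) for x in inputs],
}

# Train and score each task on its own four-row truth table.
results = {name: accuracy(train_perceptron(s), s) for name, s in tasks.items()}
for name, acc in results.items():
    print(f"{name:12s} accuracy = {acc:.2f}")
```

The first three tasks reach 1.00; XOR tops out at 0.75 at best, no matter how long you train.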

Two a-holes named Minsky and Papert wrote a book in 1969 called "Perceptrons" that exposed their weakness with XOR problems, and all the government's R&D money (for the next 20 years) went to the a-holes who were pushing rule-based expert systems. Perceptrons were LEARNING systems, while expert systems vomited out expertise CODED by a subject-matter expert.

In 1986, a really cool guy named Rumelhart (and his friends) developed a multi-layer Perceptron that COULD solve the XOR problem, and they provided an algorithm ("back-prop") that allowed the system to learn properly. This freed AI users from hard-coding expertise into programs and let them switch to self-learning systems. A year after this paper came out, Minsky and Papert were booed at an AI conference.
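To see why the extra layer matters, here is a small sketch of a two-layer network that computes XOR. Note the assumption: the weights are wired by hand, not learned with back-prop. The point is only that a hidden layer makes XOR representable at all; back-prop is the procedure that finds weights like these automatically. The names `step` and `xor_net` are made up for this example.

```python
def step(x):
    """Threshold activation: 1 if the input is positive, else 0."""
    return 1 if x > 0 else 0

def xor_net(a, b):
    # Hidden unit 1 behaves like OR: fires if at least one input is 1.
    h_or = step(a + b - 0.5)
    # Hidden unit 2 behaves like NAND: fires unless both inputs are 1.
    h_nand = step(-a - b + 1.5)
    # Output unit is an AND of the two hidden units, which gives XOR.
    return step(h_or + h_nand - 1.5)

truth_table = [((a, b), xor_net(a, b)) for a in (0, 1) for b in (0, 1)]
for (a, b), out in truth_table:
    print(a, b, "->", out)
```

Each hidden unit is itself just a perceptron solving a linearly separable problem; stacking them is what gets around the limitation.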

After 1986 there were other important developments, but they have been mostly improvements to the idea of the MLP. The MLP training algorithm uses some Calculus formulas, which were a pain. Genetic Algorithms were adopted to allow for the dead-simple calculation/evolution of the neural weights (connection strengths). Other nature-inspired algorithms came out or were put to the same use: Swarm Theory, Ant Colony Optimization, Simulated Annealing, etc. I group all these in the "MLP Era".
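For a feel of what "evolving the weights" means, here is a rough sketch of a genetic algorithm searching for the 9 weights of a tiny 2-2-1 network on XOR. Everything here (population size, mutation strength, the seed, the function names) is an assumption picked for illustration, and a run is not guaranteed to reach a perfect XOR. What it shows is the mechanism: no derivatives anywhere, just score, select, recombine, mutate.

```python
import math
import random

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
N_WEIGHTS = 9  # 4 hidden weights + 2 hidden biases + 2 output weights + 1 bias

def sigmoid(x):
    # tanh form of the logistic function; avoids overflow for large |x|.
    return 0.5 * (1.0 + math.tanh(x / 2.0))

def forward(w, a, b):
    h1 = sigmoid(w[0] * a + w[1] * b + w[2])
    h2 = sigmoid(w[3] * a + w[4] * b + w[5])
    return sigmoid(w[6] * h1 + w[7] * h2 + w[8])

def error(w):
    """Sum of squared errors over the four XOR cases (lower is better)."""
    return sum((forward(w, a, b) - t) ** 2 for (a, b), t in XOR)

def evolve(generations=200, pop_size=30, sigma=0.5, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(N_WEIGHTS)]
           for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=error)
        history.append(error(pop[0]))
        parents = pop[: pop_size // 2]   # keep the fitter half as parents
        children = [pop[0]]              # elitism: best survives unchanged
        while len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            # Uniform crossover followed by Gaussian mutation on every gene.
            children.append([rng.choice(pair) + rng.gauss(0, sigma)
                             for pair in zip(mom, dad)])
        pop = children
    best = min(pop, key=error)
    history.append(error(best))
    return best, history

best, history = evolve()
print("best error: first generation %.3f, last %.3f" % (history[0], history[-1]))
```

Because the best individual is always carried over untouched, the best error can only stay the same or improve from one generation to the next.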

How do you know a system can be trusted? Anyone who has dealt with self-learning trading systems is familiar with this. It turns out, obviously enough, the more samples of your "AI"'s output you have, the more certainty you can have that it is modeling reality. If you make a system that says someone is going to have a heart attack within 30 days, and it is right twice, that is one thing. If it is right 200 times and wrong 2 times, that is another thing. To have a high degree of certainty, you have to have a lot of unique test cases. This is what the world lacks.
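One way to put numbers on the "right twice" versus "right 200 times, wrong 2 times" comparison is a confidence interval on the hit rate. The sketch below uses the Wilson score interval at roughly 95% confidence; that choice of interval is mine, and it assumes the test cases are independent of each other. The function name `wilson_interval` is just a label for this example.

```python
import math

def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a success rate (z=1.96 is about 95%)."""
    if trials <= 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    denom = 1 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials
                         + z * z / (4 * trials * trials)) / denom
    return center - half, center + half

few = wilson_interval(2, 2)        # right twice, never wrong
many = wilson_interval(200, 202)   # right 200 times, wrong 2 times

print("2 of 2:     true hit rate plausibly as low as %.2f" % few[0])
print("200 of 202: true hit rate plausibly as low as %.2f" % many[0])
```

With 2 out of 2, the lower bound comes out around 0.34, so a "perfect" record is still consistent with a system that is wrong most of the time. With 200 out of 202, the lower bound is around 0.96.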

We track a lot of stuff, we have WIDE data, but we don't have DEEP data. If two test cases happened at the same TIME, even partially, they are generally not unique. How long has Amazon been tracking customer purchases? When they predict a sale, how far back in the customer's habits do they look? If Amazon looks at your last 2 years of shopping data, how many unique tests will they have if you joined Amazon 10 years ago? 5 non-overlapping samples. How certain can you be of something with 5 samples? It turns out, not very sure. You need hundreds of samples to train the system, and hundreds of tests to see how well it learned. This DEEP data does not exist, and won't, for a very long time. Of course you can train a system on many users, but they are still not unique. Maybe a war breaks out and everyone stops shopping. Or a bird virus scare creates a run on surgical masks. Maybe they buy toys, but only before Christmas.
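The 10-years-into-2-year-windows arithmetic can be sketched like this. The helper `window_counts` and the monthly stride are assumptions for illustration. It contrasts how many test windows you appear to have if you slide the window one month at a time with how many are actually non-overlapping.

```python
def window_counts(history_months, window_months, stride_months=1):
    """Return (overlapping, independent) counts of test windows.

    overlapping: windows obtained by sliding forward stride_months at a time.
    independent: windows that share no time period with each other.
    """
    if window_months <= 0 or history_months < window_months:
        return 0, 0
    overlapping = (history_months - window_months) // stride_months + 1
    independent = history_months // window_months
    return overlapping, independent

# 10 years of history, 2-year lookback, sliding one month at a time.
overlapping, independent = window_counts(120, 24)
print("sliding windows:", overlapping)           # 97
print("non-overlapping windows:", independent)   # 5
```

The 97 sliding windows look like plenty of tests, but neighbouring windows share 23 of their 24 months, so they are nowhere near 97 unique cases.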

So the learning algorithms have not really changed, and the only data is SHALLOW data. There is nothing there. It's fantasy, but I guess there is going to be a lot of money thrown at this fantasy.

I'm not saying you can't beat RANDOM, or you can't beat someone else, but I don't see any justification for the recent hype around AI. If anything, the Netflix Prize is one of the better real-world examples of AI.

Netflix Prize - Wikipedia

Dislike ads? Become a Fastlane member:

Subscribe today and surround yourself with winners and millionaire mentors, not those broke friends who only want to drink beer and play video games. :-)

Last edited:

Membership Required: Upgrade to Expose Nearly 1,000,000 Posts

Ready to Unleash the Millionaire Entrepreneur in You?

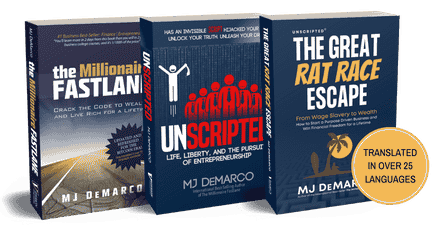

Become a member of the Fastlane Forum, the private community founded by best-selling author and multi-millionaire entrepreneur MJ DeMarco. Since 2007, MJ DeMarco has poured his heart and soul into the Fastlane Forum, helping entrepreneurs reclaim their time, win their financial freedom, and live their best life.

With more than 39,000 posts packed with insights, strategies, and advice, you’re not just a member—you’re stepping into MJ’s inner-circle, a place where you’ll never be left alone.

Become a member and gain immediate access to...

- Active Community: Ever join a community only to find it DEAD? Not at Fastlane! As you can see from our home page, life-changing content is posted dozens of times daily.

- Exclusive Insights: Direct access to MJ DeMarco’s daily contributions and wisdom.

- Powerful Networking Opportunities: Connect with a diverse group of successful entrepreneurs who can offer mentorship, collaboration, and opportunities.

- Proven Strategies: Learn from the best in the business, with actionable advice and strategies that can accelerate your success.

"You are the average of the five people you surround yourself with the most..."

Who are you surrounding yourself with? Surround yourself with millionaire success. Join Fastlane today!

Join Today